Our eyes tell us that people look different. No one has trouble distinguishing a Czech from a Chinese. But what do those differences mean? Are they biological? Has race always been with us? How does race affect people today?

There's less - and more - to race than meets the eye:

1. Race is a modern idea. Ancient societies, like the Greeks, did not divide people according to physical distinctions, but according to religion, status, class, even language. The English language didn't even have the word 'race' until it turned up in 1508 in a poem by William Dunbar referring to a line of kings.

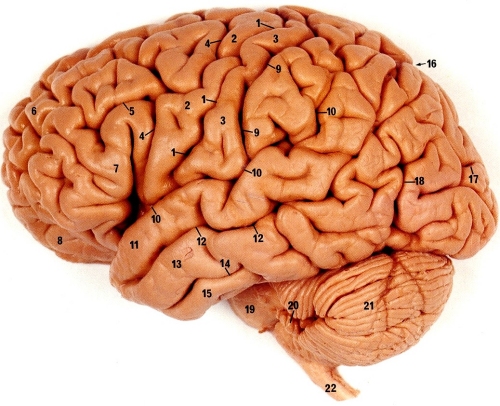

2. Race has no genetic basis. Not one characteristic, trait or even gene distinguishes all the members of one so-called race from all the members of another so-called race.

3. Human subspecies don't exist. Unlike many animals, modern humans simply haven't been around long enough or isolated enough to evolve into separate subspecies or races. Despite surface appearances, we are one of the most similar of all species.

4. Skin color really is only skin deep. Most traits are inherited independently from one another. The genes influencing skin color have nothing to do with the genes influencing hair form, eye shape, blood type, musical talent, athletic ability or forms of intelligence. Knowing someone's skin color doesn't necessarily tell you anything else about him or her.

5. Most variation is within, not between, "races." Of the small amount of total human variation, 85% exists within any local population, be they Italians, Kurds, Koreans or Cherokees. About 94% can be found within any continent. That means two random Koreans may be as genetically different as a Korean and an Italian.

6. Slavery predates race. Throughout much of human history, societies have enslaved others, often as a result of conquest or war, even debt, but not because of physical characteristics or a belief in natural inferiority. Due to a unique set of historical circumstances, ours was the first slave system where all the slaves shared similar physical characteristics.

7. Race and freedom evolved together. The U.S. was founded on the radical new principle that "All men are created equal." But our early economy was based largely on slavery. How could this anomaly be rationalized? The new idea of race helped explain why some people could be denied the rights and freedoms that others took for granted.

8. Race justified social inequalities as natural. As the race idea evolved, white superiority became "common sense" in America. It justified not only slavery but also the extermination of Indians, exclusion of Asian immigrants, and the taking of Mexican lands by a nation that professed a belief in democracy. Racial practices were institutionalized within American government, laws, and society.

9. Race isn't biological, but racism is still real. Race is a powerful social idea that gives people different access to opportunities and resources. Our government and social institutions have created advantages that disproportionately channel wealth, power, and resources to white people. This affects everyone, whether we are aware of it or not.

10. Colorblindness will not end racism. Pretending race doesn't exist is not the same as creating equality. Race is more than stereotypes and individual prejudice. To combat racism, we need to identify and remedy social policies and institutional practices that advantage some groups at the expense of others.

Saturday, November 29, 2008

TEN THINGS EVERYONE SHOULD KNOW ABOUT RACE

Friday, November 28, 2008

Democracy Now tribute to Studs Terkel

The legendary radio broadcaster, writer, oral historian, raconteur and chronicler of our times, Studs Terkel, died last month at the age of ninety-six in his hometown of Chicago. Today, a Democracy Now! special tribute: we spend the hour on Studs Terkel. Over the years, Terkel was a regular guest on Democracy Now! We play a wide-ranging interview we did with him in 2005. We also feature a rare recording of Terkel interviewing the Rev. Martin Luther King at the bedside of the gospel singer Mahalia Jackson. “My curiosity is what saw me through,” Terkel said in 2005. “What would the world be like, or will there be a world? And so, that’s my epitaph. I have it all set. Curiosity did not kill this cat. And it’s curiosity, I think, that has saved me thus far.”

Wednesday, November 26, 2008

New Quantum Weirdness: Balls That Don't Roll Off Cliffs

Quantum particles continue to behave in ways traditional particles do not

By George Musser

A good working definition of quantum mechanics is that things are the exact opposite of what you thought they were. Empty space is full, particles are waves, and cats can be both alive and dead at the same time. Recently a group of physicists studied another quantum head spinner. You might innocently think that when a particle rolls across a tabletop and reaches the edge, it will fall off. Sorry. In fact, a quantum particle under the right conditions stays on the table and rolls back.

This effect is the converse of the well-known (if no less astounding) phenomenon of quantum tunneling. If you kick a soccer ball up a hill too slowly, it will come back down. But if you kick a quantum particle up a hill at the same speed, it can make it up and over. The particle will have “tunneled” across (although no actual tunnel is involved). This process explains how particles can escape atomic nuclei, causing radioactive alpha decay. And it is the basis of many electronic devices.

In tunneling, the particle can do something the ball never does. Conversely, the particle might not do something the ball always does. If you kick a soccer ball toward the edge of a cliff, it will always fall off. But if you kick a particle toward the edge, it can bounce back to you. The particle is like one of those little toy robots that senses the edge of a table or staircase and reverses course, except that the particle has no internal mechanism to pull off its stunt. It naturally does the exact opposite of what the forces acting on it would indicate. The researchers behind the analysis—Pedro L. Garrido of the University of Granada in Spain, Jani Lukkarinen of the University of Helsinki, and Sheldon Goldstein and Roderich Tumulka, both at Rutgers University—call this phenomenon “antitunneling.”

In both cases, the explanation lies in the wave nature of particles, which in turn reflects the fact that a quantum particle generally has an ambiguous location. The wave describes the range of locations where it could be found. This wave behaves much like ordinary waves such as sound. Whenever any wave encounters a barrier that is not absolutely rigid, some of the wave will penetrate into the barrier, albeit with diminishing intensity. If the barrier is not too thick, the wave can reemerge on the other side. That is analogous to tunneling.
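This minimal Python sketch puts numbers on the tunneling analogy. It computes the standard textbook transmission probability for a particle of energy E that meets a rectangular barrier of height V0 > E and width a. The sketch assumes one dimension, a perfectly rectangular barrier, and units in which ħ = m = 1. The name barrier_transmission is hypothetical, chosen for illustration. Inside the barrier the wave decays at the rate κ = √(2m(V0 − E))/ħ, so the chance of reemerging on the far side falls off roughly exponentially as the barrier thickens.

```python
import math


def barrier_transmission(energy: float, height: float, width: float) -> float:
    """Transmission probability through a 1D rectangular barrier (0 < energy < height).

    Assumes units with hbar = m = 1; the function name is illustrative only.
    """
    if not 0 < energy < height:
        raise ValueError("this formula covers 0 < energy < height only")
    # Decay constant inside the barrier: kappa = sqrt(2 m (V0 - E)) / hbar
    kappa = math.sqrt(2.0 * (height - energy))
    # Textbook result: T = 1 / (1 + V0^2 sinh^2(kappa a) / (4 E (V0 - E)))
    penalty = height ** 2 * math.sinh(kappa * width) ** 2 / (4.0 * energy * (height - energy))
    return 1.0 / (1.0 + penalty)


# Same energy and barrier height, progressively thicker barriers
for width in (0.5, 1.0, 2.0, 4.0):
    print(f"width={width}: T={barrier_transmission(0.5, 1.0, width):.4f}")
```

The printed values shrink rapidly as the width grows. That is the article's "if the barrier is not too thick" condition expressed in numbers.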

For antitunneling, the analogy is that whenever any wave encounters any abrupt change of conditions—even ones more favorable to its propagation—some of it will reflect back. Something similar happens when a scuba diver looks up and sees the sea surface acting as a mirror. To be sufficiently abrupt, the distance over which conditions change must be shorter than the wavelength (which for a particle is related to momentum). If the change is too gradual, the wave will simply go along, and the particle will act like a soccer ball after all.
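This minimal Python sketch shows how much of the wave bounces back at the "cliff edge." It assumes an idealized, perfectly sharp potential drop of depth V0 in one dimension. It uses the standard textbook step result R = ((k1 − k2)/(k1 + k2))², where k1 and k2 are the wavenumbers before and after the edge. This is not the numerical analysis Garrido and colleagues performed, and the name step_down_reflection is hypothetical.

```python
import math


def step_down_reflection(energy: float, drop: float) -> float:
    """Reflection probability for a particle meeting an abrupt potential drop.

    energy: kinetic energy E > 0 before the edge.
    drop:   depth V0 >= 0 of the drop (assumed perfectly sharp).
    Only the ratio drop/energy matters, so hbar and m cancel out.
    """
    if energy <= 0 or drop < 0:
        raise ValueError("need energy > 0 and drop >= 0")
    # Wavenumber ratio k2/k1 = sqrt((E + V0) / E)
    ratio = math.sqrt((energy + drop) / energy)
    # Step result R = ((k1 - k2) / (k1 + k2))^2, written in terms of the ratio
    return ((1.0 - ratio) / (1.0 + ratio)) ** 2


# E = V0/3 makes k2 = 2*k1, so R = (1/3)^2 = 1/9: about one kick in nine comes back
print(step_down_reflection(1.0, 3.0))
```

A deeper drop creates a bigger mismatch between k1 and k2, so more of the wave reflects. The formula holds only when the edge is sharp compared with the wavelength. For a gradual slope the reflection becomes negligible, and the particle behaves like the soccer ball.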

Garrido and his colleagues undertook a numerical analysis to rule out the possibility that the phenomenon was an artifact of idealized assumptions. They also calculated how long a particle will tend to roll around the table before going over the edge; it gets longer the higher the table is. David Griffiths of Reed College, author of a widely used introductory quantum mechanics textbook (the second edition of which gives a version of antitunneling as a student exercise), calls it “a very sweet paradox.” Physicist Frank Wilczek of the Massachusetts Institute of Technology says, “It’s a solid analysis, and it points out an interesting phenomenon I hadn’t been consciously aware of.”

Antitunneling might have applications for building laboratory particle traps, describing nuclear decay or exploring the foundations of quantum mechanics, but its main appeal is to remind physicists how a nearly century-old theory has lost none of its capacity to surprise.

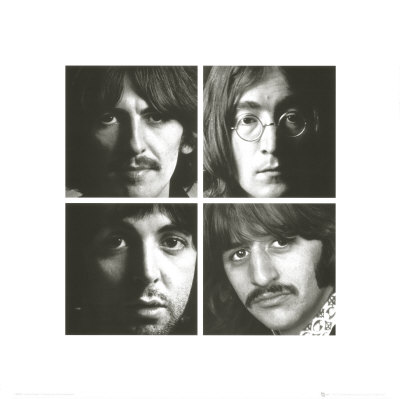

'The White Album' 40 Years Later

When The White Album was released 40 years ago this month, fans were both baffled and awestruck by its sprawling world of sound. It was released as a double LP (almost unheard of at the time) and featured instant classics like "I Will," "While My Guitar Gently Weeps," and "Blackbird." But The White Album (its real name is simply The Beatles) was also filled with songs many found hard to digest, like the eight-minute experimental sound collage "Revolution 9" or the inexplicably surreal "Wild Honey Pie." On this edition of All Songs Considered, host Bob Boilen talks with Bruce Spizer, author of The Beatles on Apple Records, about the groundbreaking White Album and how it came to be.

Thursday, November 20, 2008

How an Anarchist society could function

The author of these videos is an anarcho-capitalist. I would personally tweak a few points and make it much more worker-centric and communal; however, what attracts me to these videos is that they take on the problems the left hasn't been able to solve, namely currency and defense/police. I still consider myself very much on the left, but we must stop assuming human nature will change. Whenever we're asked about money or the police in a future, stateless arrangement, the best we offer is "From each according to his abilities, to each according to his needs" and "People won't want to commit crimes in the future," which is just gobbledygook. No matter how corrupt a person is, he or she will respond to incentives, and that's what these videos attempt to articulate.

Like it or not, a stateless society must be a "free market," a patchwork of communists, syndicalists, agorists, mutualists, capitalists, etc., and that's what will make that future system so beautiful. Don't like capitalism? No problem: join a commune, or become a hard-line individualist and journey out into the woods Thoreau-style. There can even be a mix of approaches, such as an internally socialistic community with direct democracy and mixed labor roles that sells its commodities on the open market. The concepts are worth your time and merit closer inspection by anyone who upholds liberty as an essential value.

Monopolies

Police

Health Care

Roads

Education

Defense

Courts

Currency

Evilness of Power

From Primitive to Modern

Logical & Historical Arguments

Hierarchical Psychology

Morality, Property & the State

Consumerism, Nationalism & Regale

Propaganda Model p.1

Propaganda Model p.2

Solutions to Capitalist Psychopathy

To Have, To Be, To Die: Wage Slavery

The Triumph of Anarchism

Thursday, November 13, 2008

Tuesday, November 11, 2008

The Life of John Lennon

Peace activist, edgy artist, international icon. It is hard to sum up John Lennon and his influence on fans worldwide. Biographer Philip Norman takes an unflinching look, casting light on a man who shaped a generation's outlook on politics, religion and art.

Monday, November 10, 2008

America the Illiterate

We live in two Americas. One America, now the minority, functions in a print-based, literate world. It can cope with complexity and has the intellectual tools to separate illusion from truth. The other America, which constitutes the majority, exists in a non-reality-based belief system. This America, dependent on skillfully manipulated images for information, has severed itself from the literate, print-based culture. It cannot differentiate between lies and truth. It is informed by simplistic, childish narratives and clichés. It is thrown into confusion by ambiguity, nuance and self-reflection. This divide, more than race, class or gender, more than rural or urban, believer or nonbeliever, red state or blue state, has split the country into radically distinct, unbridgeable and antagonistic entities.

By Chris Hedges

There are over 42 million American adults, 20 percent of whom hold high school diplomas, who cannot read, as well as the 50 million who read at a fourth- or fifth-grade level. Nearly a third of the nation’s population is illiterate or barely literate. And their numbers are growing by an estimated 2 million a year. But even those who are supposedly literate retreat in huge numbers into this image-based existence. A third of high school graduates, along with 42 percent of college graduates, never read a book after they finish school. Eighty percent of the families in the United States last year did not buy a book.

The illiterate rarely vote, and when they do vote they do so without the ability to make decisions based on textual information. American political campaigns, which have learned to speak in the comforting epistemology of images, eschew real ideas and policy for cheap slogans and reassuring personal narratives. Political propaganda now masquerades as ideology. Political campaigns have become an experience. They do not require cognitive or self-critical skills. They are designed to ignite pseudo-religious feelings of euphoria, empowerment and collective salvation. Campaigns that succeed are carefully constructed psychological instruments that manipulate fickle public moods, emotions and impulses, many of which are subliminal. They create a public ecstasy that annuls individuality and fosters a state of mindlessness. They thrust us into an eternal present. They cater to a nation that now lives in a state of permanent amnesia. It is style and story, not content or history or reality, which inform our politics and our lives. We prefer happy illusions. And it works because so much of the American electorate, including those who should know better, blindly cast ballots for slogans, smiles, the cheerful family tableaux, narratives and the perceived sincerity and the attractiveness of candidates. We confuse how we feel with knowledge.

The illiterate and semi-literate, once the campaigns are over, remain powerless. They still cannot protect their children from dysfunctional public schools. They still cannot understand predatory loan deals, the intricacies of mortgage papers, credit card agreements and equity lines of credit that drive them into foreclosures and bankruptcies. They still struggle with the most basic chores of daily life from reading instructions on medicine bottles to filling out bank forms, car loan documents and unemployment benefit and insurance papers. They watch helplessly and without comprehension as hundreds of thousands of jobs are shed. They are hostages to brands. Brands come with images and slogans. Images and slogans are all they understand. Many eat at fast food restaurants not only because it is cheap but because they can order from pictures rather than menus. And those who serve them, also semi-literate or illiterate, punch in orders on cash registers whose keys are marked with symbols and pictures. This is our brave new world.

Political leaders in our post-literate society no longer need to be competent, sincere or honest. They only need to appear to have these qualities. Most of all they need a story, a narrative. The reality of the narrative is irrelevant. It can be completely at odds with the facts. The consistency and emotional appeal of the story are paramount. The most essential skill in political theater and the consumer culture is artifice. Those who are best at artifice succeed. Those who have not mastered the art of artifice fail. In an age of images and entertainment, in an age of instant emotional gratification, we do not seek or want honesty. We ask to be indulged and entertained by clichés, stereotypes and mythic narratives that tell us we can be whomever we want to be, that we live in the greatest country on Earth, that we are endowed with superior moral and physical qualities and that our glorious future is preordained, either because of our attributes as Americans or because we are blessed by God or both.

The ability to magnify these simple and childish lies, to repeat them and have surrogates repeat them in endless loops of news cycles, gives these lies the aura of an uncontested truth. We are repeatedly fed words or phrases like yes we can, maverick, change, pro-life, hope or war on terror. It feels good not to think. All we have to do is visualize what we want, believe in ourselves and summon those hidden inner resources, whether divine or national, that make the world conform to our desires. Reality is never an impediment to our advancement.

The Princeton Review analyzed the transcripts of the Gore-Bush debates, the Clinton-Bush-Perot debates of 1992, the Kennedy-Nixon debates of 1960 and the Lincoln-Douglas debates of 1858. It reviewed these transcripts using a standard vocabulary test that indicates the minimum educational standard needed for a reader to grasp the text. During the 2000 debates George W. Bush spoke at a sixth-grade level (6.7) and Al Gore at a seventh-grade level (7.6). In the 1992 debates Bill Clinton spoke at a seventh-grade level (7.6), while George H.W. Bush spoke at a sixth-grade level (6.8), as did H. Ross Perot (6.3). In the debates between John F. Kennedy and Richard Nixon the candidates spoke in language used by 10th-graders. In the debates of Abraham Lincoln and Stephen A. Douglas the scores were respectively 11.2 and 12.0. In short, today’s political rhetoric is designed to be comprehensible to a 10-year-old child or an adult with a sixth-grade reading level. It is fitted to this level of comprehension because most Americans speak, think and are entertained at this level. This is why serious film and theater and other serious artistic expression, as well as newspapers and books, are being pushed to the margins of American society. Voltaire was the most famous man of the 18th century. Today the most famous “person” is Mickey Mouse.

In our post-literate world, because ideas are inaccessible, there is a need for constant stimulus. News, political debate, theater, art and books are judged not on the power of their ideas but on their ability to entertain. Cultural products that force us to examine ourselves and our society are condemned as elitist and impenetrable. Hannah Arendt warned that the marketization of culture leads to its degradation, that this marketization creates a new celebrity class of intellectuals who, although well read and informed themselves, see their role in society as persuading the masses that “Hamlet” can be as entertaining as “The Lion King” and perhaps as educational. “Culture,” she wrote, “is being destroyed in order to yield entertainment.”

“There are many great authors of the past who have survived centuries of oblivion and neglect,” Arendt wrote, “but it is still an open question whether they will be able to survive an entertaining version of what they have to say.”

The change from a print-based to an image-based society has transformed our nation. Huge segments of our population, especially those who live in the embrace of the Christian right and the consumer culture, are completely unmoored from reality. They lack the capacity to search for truth and cope rationally with our mounting social and economic ills. They seek clarity, entertainment and order. They are willing to use force to impose this clarity on others, especially those who do not speak as they speak and think as they think. All the traditional tools of democracies, including dispassionate scientific and historical truth, facts, news and rational debate, are useless instruments in a world that lacks the capacity to use them.

As we descend into a devastating economic crisis, one that Barack Obama cannot halt, there will be tens of millions of Americans who will be ruthlessly thrust aside. As their houses are foreclosed, as their jobs are lost, as they are forced to declare bankruptcy and watch their communities collapse, they will retreat even further into irrational fantasy. They will be led toward glittering and self-destructive illusions by our modern Pied Pipers—our corporate advertisers, our charlatan preachers, our television news celebrities, our self-help gurus, our entertainment industry and our political demagogues—who will offer increasingly absurd forms of escapism.

The core values of our open society, the ability to think for oneself, to draw independent conclusions, to express dissent when judgment and common sense indicate something is wrong, to be self-critical, to challenge authority, to understand historical facts, to separate truth from lies, to advocate for change and to acknowledge that there are other views, different ways of being, that are morally and socially acceptable, are dying. Obama used hundreds of millions of dollars in campaign funds to appeal to and manipulate this illiteracy and irrationalism to his advantage, but these forces will prove to be his most deadly nemesis once they collide with the awful reality that awaits us.

Copyright © 2008 Truthdig, L.L.C.

Saturday, November 08, 2008

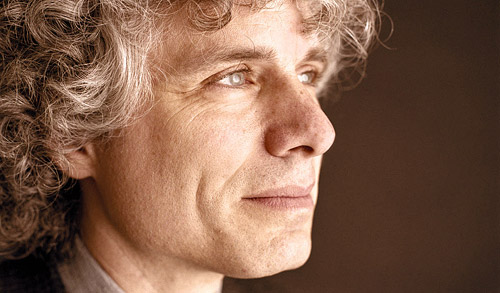

In Depth: Steven Pinker

Steven Pinker currently teaches at Harvard University where he holds the positions of Harvard College Professor and the Johnstone Family Professor in the Department of Psychology. Professor Pinker is the author of seven books, including "The Language Instinct" (1994), "How the Mind Works" (1997), "Words and Rules" (1999), "The Blank Slate: The Modern Denial of Human Nature" (2002), and his latest, "The Stuff of Thought: Language as a Window into Human Nature" (2007).

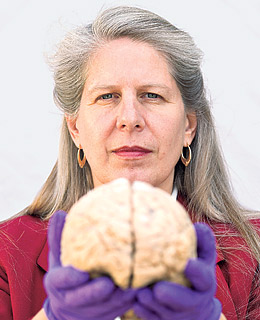

After a Stroke, a Scientist Studies Herself

Neurological researcher Jill Bolte Taylor suffered a stroke 12 years ago. While a stroke is often devastating and sometimes fatal, Taylor was able to make a complete recovery after becoming her own experimental subject. Her new book, My Stroke of Insight: A Brain Scientist's Personal Journey, recounts her experience.

Taylor is a Harvard-trained neuroanatomist. She was named one of Time magazine's 100 Most Influential People in the World for 2008.

She is currently affiliated with the Indiana University School of Medicine in Indianapolis.

Friday, November 07, 2008

When Books Could Change Your Life

Why What We Pore Over at 12 May Be the Most Important Reading We Ever Do

Illustration: Emily Flake

By Tim Kreider

A girl I once caught reading Fahrenheit 451 over my shoulder on the subway confessed: "You know, I'm an English lit major, but I've never loved any books like the ones I loved when I was 12 years old." I fell slightly in love with her when she said that. It was so frank and uncool, and undeniably true.

Let's all admit it: We never got over those first loves. Listen to the difference between the voices of any group of well-read, overeducated people discussing contemporary fiction, or the greatest books they've ever read, and the voices of those same people, only two drinks later, talking about the books they loved as kids. The Betsy-Tacy books! I loved those books! The Wonderful Flight to the Mushroom Planet! I can't believe you know that! The Little House on the Prairie books! Oh, my God--did you read The Long Winter? So good. Hey--does anyone else remember The Spaceship Under the Apple Tree?

It's not just that these books, unlike adult literature, have been left unsullied by professors turning them into objects of tedious study. We love these books, dearly and uncritically, the way we love the smell of our first girlfriend's perfume, no matter how cheap or tacky it might have been. Let's be honest: We all know that Ulysses and À la recherche du temps perdu are "better" books than The Velveteen Rabbit or The Little Prince, but come on--which would you take with you on a spaceship to salvage from the dying Earth?

Let me put it another way: When was the last time a book changed your life? I don't mean offered you new insights or ideas or moved you--I mean profoundly changed the way you see the world or shaped the kind of person you are? If you're like me, it's been longer than you'd like to admit. I recently read Eli Sagan's Cannibalism: Human Aggression and Cultural Form, which enabled me to see capitalism as a highly sublimated form of aggression, on the same continuum as headhunting, warfare, and slavery, and Marcus Aurelius' Meditations, which gave me a greater equanimity about the esteem of others and assuaged my fear of death. But if I ever end up holed up in my parents' farmhouse holding off the bulldozers with a machine gun while listening to Beethoven's late quartets, it'll be because of the story "And the Moon Be Still as Bright" from Ray Bradbury's The Martian Chronicles.

About the last time in our lives when books have this kind of potent effect on us is in our early 20s, which not coincidentally tends to be the age of people you see poring over Nietzsche or that awful Ayn Rand. There's something alarming about this. I don't want to believe that our personalities ossify so much in adulthood that we're no longer capable of being changed by art. But part of the reason art loses its power over us, of course, is, simply and sadly, that we get old; our personalities, as soft, impressionable, and tempting as freshly poured sidewalk cement when young, gradually set and harden over the years with whatever graffiti passers-by scrawled there still indelibly inscribed in them. But when a 14-year-old gushes that the Twilight books are the best she's ever read in her whole life, it's easy for grownups to forget that this is not necessarily hyperbole. At that age, we haven't heard any clichés, and even dumb ideas are new.

It's not that children's books are pure entertainment, innocent of any didactic goal--what grownups enviously call "Reading for Fun." On the contrary, the reading we do as children may be more serious than any reading we'll ever do again. Books for children and young people are unashamedly prescriptive: They're written, at least in part, to teach us what the world is like, how people are, and how we should behave--as my colleague Megan Kelso (The Squirrel Mother) puts it, "How to be a human being."

There is a level of moral instruction in these books underneath the incidentals of plot, character, and setting that we're constantly absorbing: How would a decent person act in this situation? What would a bad person do? What's the right thing to say to a friend when something terrible happens? The Lord of the Rings books are no more concerned with martial virtues such as loyalty and courage than they are with elaborate codes of courtesy and honorable conduct. Bridge to Terabithia makes this function of literature explicit when Leslie gives Jess The Chronicles of Narnia to read so that he can learn how a prince should behave.

"They're such moral books," Kelso says of the Little House series. "There's so much in them about how a good family should be, how communities help each other, the pioneer spirit, and the morality of the country." We're hardly even aware of this aspect of books when we're children because it's such a basic need; we're ravenous for this information. The exotic details of story and setting are like the sugary frosting on children's cereal; these lessons about life and the world are the real nutriment, the eight essential vitamins and minerals.

Of course, it's also in childhood that we're first exposed to some of life's big shocks and secrets--love and mortality. And the most terrible secret of all is the inevitable syllogism of these two: that the things we love will die. If we're lucky, the first loved ones we lose in this life are imaginary: Charlotte, Old Yeller, Old Dan and Little Ann (Where the Red Fern Grows), Flag (The Yearling), Aslan (though this is sort of a cheat since, like Jesus, he comes back right away), or Leslie Burke (Bridge to Terabithia).

"I think adults tend to forget about the fears of childhood," author Jenny Boylan (She's Not There and I'm Looking Through You) says via e-mail. "I was then and am now drawn to stories that paint a more complicated picture of childhood. Fern, in Charlotte's Web, is poised between childhood and adolescence--she starts off rescuing Wilbur from death (yes, that's right, DEATH WITH AN AX), and yet by story's end she kind of forgets about Wilbur--she and Henry are 'off at the fair.' So to speak.

"At story's end, Wilbur's one friend--the wise, illuminating, literate spider--curls up and dies. Wilbur manages to save her egg sac, tends it all winter, and in the spring, the babies hatch out and--IMMEDIATELY LEAVE HIM. Except for a couple of them, who know nothing of Charlotte, and how she saved Wilbur's life. Charlotte's Web was the first book that made me weep, and I wept because I knew that it contained truth."

Sometimes we're not ready for the truths inside these books--they're trying to feed us ideas that are still bigger than our heads. A collection innocuously called The Golden Book of Fun and Nonsense became an object of hysterical fear for me because it contained a chapter of translated Struwwelpeter, those grim German verses about ill-behaved children (they refuse to eat soup, they slam doors) who meet with what are presented as well-deserved, fitting deaths (starvation, getting clocked by a marble bust). These stories are products of the same German child-rearing tradition that produced grownups like Hitler. This chapter had to be paper-clipped shut in order to render the book safe for me. It wasn't the specter of transgression and punishment that was so terrifying to me--it was the casual, brutal fact of death.

Kelso evokes the quality of those children's books that takes on an almost numinous power. "It's magic," she says. "It contains some secret special knowledge for you, and it gives a book a vibe, like it almost scares you, but you keep going back to it again and again." She cites one book that did affect her in adulthood as deeply, and in the same way, as the books that fascinated and frightened her when she was a child: The Face of Battle, by John Keegan, a reconstruction of what several famous historic battles must have been like for the soldiers who fought in them. "I keep going back to it, like it has something to tell me," Kelso says. Like children's books, Keegan is telling her one of life's terrible secrets, a secret about Men and Death that's completely outside her experience as a woman who's lived her whole life in (relative) peacetime.

Even as fully grown adults we remain secretly starved for guidance and instruction. Many of us are walking around with the uneasy feeling that we missed the first day of class and wondering if there are CliffsNotes. Most people desperately want someone to tell them what life's about, what people are for, what we're supposed to do--how to be a human being. But serious literature, at least since the 19th century, has been disdainful of fulfilling any didactic obligation. Sorry, kids, that isn't what art is for.

There is a kind of no man's land in the literary landscape that can't be called "children's" or "young adult"--it's recognized as serious literature, if a little patronizingly, by the adult world--but which has a specific and perennial appeal to adolescents. I'm thinking here of writers such as J.D. Salinger and Kurt Vonnegut Jr., those staples of the college dorm. We reserve a special reverence for these authors that is qualitatively different from the respect, even awe, we feel for undeniably great writers like Toni Morrison or Cormac McCarthy--it's less rational or open to critical discussion. The reaction to revelations of the usual mundane human failings in recent biographies of figures beloved from childhood, such as Ray Bradbury or Charles Schulz, has been not just the surprise or sad worldly shrug we might expect but hostility and denial--a sense that we ought not to have been told such things, as if we'd been told once more that Santa Claus wasn't real or Shoeless Joe threw the series. And Joyce Maynard and Margaret Salinger's troubling memoirs about Salinger--we didn't want to know. Salinger and Vonnegut both give voice to the adolescent passion for justice, their dogmatic, almost fanatical, fairness and decency, and their blooming disgust at the epiphany that the world adults are foisting on them is neither fair nor decent.

Meanwhile, books that unabashedly purport to supply all the answers sell like Hula-Hoops or Viagra. This genre is called "wisdom literature" if it's old enough to be respectable or "self-help" if it's by someone who's still alive and making money off it, and ranges in credibility and earnestness of intention from the Tao Te Ching and Aurelius' Meditations to shameless dogshit like The Secret. "Religious" comprises its own category on publishers' best-seller lists, so mammoth and lucrative is this market.

I would suggest that this genre is so popular because it is effectively children's literature for adults. These books address us directly, confidentially, allegedly explaining everything and advising us how to comport ourselves correctly. Even cynical hoaxes (or, to give them the benefit of the doubt, artifacts of clinical delusion) such as The Celestine Prophecy, The Da Vinci Code, and The Shack partake of--or exploit--that same thrill of being let in on a secret, the shiver of magic you remember from the first time you walked farther back in the old wardrobe than the wardrobe went and felt the furs turn to firs against your cheek, or glimpsed an old Victorian house in the fog where none had been the day before, or saw an unearthly glow over the hill out in the old apple orchard. Titles such as The Secret, The Rules, and The Game pretty much say it all: Someone's finally going to initiate us into the select society of Those in the Know, for only $23.95 retail.

These books also frequently appeal to some authority higher than that of mere fellow human beings: the ancients, beneficent aliens, or good old God. (This is how sacred texts always establish their authority: Hey, I didn't make this stuff up; I just wrote it down.) When we're children, all the books we read are handed down to us, like the Ten Commandments, by grownups, who seem like, and sort of are, a different order of being from ourselves. They're the gods of childhood, bigger and older and more experienced; they know more than we do, imparting what wisdom to us they think we can bear, empowered to tell us what to do. I'm over 40 now, no longer by even the most charitable definition a young adult, and I'm starting to realize, in something like panic, that I don't understand anything, and that nobody else seems to know any more about it than I do. There aren't any grownups. And maybe there aren't any secrets left to tell.

Thursday, November 06, 2008

Conversations With History: Chomsky/Hitchens/Johnson/Ehrman/Terkel/Searle

Noam Chomsky

Christopher Hitchens

Chalmers Johnson

Bart D. Ehrman

Studs Terkel

John R. Searle

Tuesday, November 04, 2008

Sunday, November 02, 2008

Saturday, November 01, 2008

Labor Struggle in a Free Market


by Kevin Carson

One of the most common questions raised about a hypothetical free market society concerns worker protection laws of various kinds. As Roderick Long puts it,

In a free nation, will employees be at the mercy of employers?… Under current law, employers are often forbidden to pay wages lower than a certain amount; to demand that employees work in hazardous conditions (or sleep with the boss); or to fire without cause or notice. What would be the fate of employees without these protections?

Long argues that, despite the absence of many of today’s formal legal protections, the shift of bargaining power toward workers in a free labor market will result in “a reduction in the petty tyrannies of the job world.”

Employers will be legally free to demand anything they want of their employees. They will be permitted to sexually harass them, to make them perform hazardous work under risky conditions, to fire them without notice, and so forth. But bargaining power will have shifted to favor the employee. Since prosperous economies generally see an increase in the number of new ventures but a decrease in the birth rate, jobs will be chasing workers rather than vice versa. Employees will not feel coerced into accepting mistreatment because it will be so much easier to find a new job. And workers will have more clout, when initially hired, to demand a contract which rules out certain treatment, mandates reasonable notice for layoffs, stipulates parental leave, or whatever. And the kind of horizontal coordination made possible by telecommunications networking opens up the prospect that unions could become effective at collective bargaining without having to surrender authority to a union boss.

This last is especially important. Present-day labor law limits the bargaining power of labor at least as much as it reinforces it. That’s especially true of reactionary legislation like Taft-Hartley and state right-to-work laws. Both are clearly abhorrent to free market principles.

Taft-Hartley, for example, prohibited many of the most successful labor strategies used in the CIO organizing strikes of the mid-’30s. The CIO planned strikes like a general staff plans a campaign, with strikes in a plant supported by sympathy and boycott strikes up and down the production chain, from suppliers to outlets, and supported by transport workers refusing to haul scab cargo. At their best, the CIO’s strikes turned into regional general strikes.

Right-wing libertarians of the vulgar sort like to argue that unions depend primarily on the threat of force, backed by the state, to exclude non-union workers. Without forcible exclusion of scabs, they say, strikes would almost always turn into lockouts and union defeats. Although this has acquired the status of dogma at Mises.Org, it’s nonsense on stilts. The primary reason for the effectiveness of a strike is not the exclusion of scabs, but the transaction costs involved in hiring and training replacement workers, and the steep loss of productivity entailed in the disruption of human capital, institutional memory, and tacit knowledge.

When the strike is organized in depth, with multiple lines of defense (those sympathy and boycott strikes at every stage of production), the cost and disruption have a multiplier effect far beyond that of a strike in a single plant. Under such conditions, even a large minority of workers walking off the job at each stage of production can be quite effective.

Taft-Hartley greatly reduced the effectiveness of strikes at individual plants by prohibiting such coordination of actions across multiple plants or industries. Taft-Hartley’s cooling-off periods also gave employers advance warning to prepare for such disruptions, and greatly reduced the informational rents embodied in the training of the existing workforce, since replacements could be recruited and trained in the interim. If such restrictions on sympathy and boycott strikes at suppliers were not in place, today’s “just-in-time” economy would likely be far more vulnerable to disruption than that of the 1930s.

But long before Taft-Hartley, the labor law regime of the New Deal had already created a fundamental shift in the form of labor struggle.

Before Wagner and the NLRB-enforced collective bargaining process, labor struggle was less focused on strikes, and more focused on what workers did in the workplace itself to exert leverage against management. They focused, in other words, on what the Wobblies call “direct action on the job”; or in the colorful phrase of a British radical workers’ daily at the turn of the century, “staying in on strike.” The reasoning was explained in the Wobbly pamphlet “How to Fire Your Boss: A Worker’s Guide to Direct Action”:

The bosses, with their large financial reserves, are better able to withstand a long drawn-out strike than the workers. In many cases, court injunctions will freeze or confiscate the union’s strike funds. And worst of all, a long walk-out only gives the boss a chance to replace striking workers with a scab (replacement) workforce.

Workers are far more effective when they take direct action while still on the job. By deliberately reducing the boss’ profits while continuing to collect wages, you can cripple the boss without giving some scab the opportunity to take your job.

Such tactics included slowdowns, sick-ins, random one-day walkouts at unannounced intervals, working to rule, “good work” strikes, and “open mouth sabotage.” Labor followed, in other words, a classic asymmetric warfare model. Instead of playing by the enemy’s rules and suffering one honorable defeat after another, they played by their own rules and mercilessly exploited the enemy’s weak points.

The whole purpose of the Wagner regime was to put an end to this asymmetric warfare model. As Thomas Ferguson and G. William Domhoff have both argued, corporate backing for the New Deal labor accord came mainly from capital-intensive industry — the heart of the New Deal coalition in general. Because of the complicated technical nature of their production processes and their long planning horizons, their management required long-term stability and predictability. At the same time, because they were extremely capital-intensive, labor costs were a relatively modest part of total costs. Management, therefore, was willing to trade significant wage increases and job security for social peace on the job. Wagner came about, not because the workers were begging for it, but because the bosses were begging for a regime of enforceable labor contracts.

The purpose of the Wagner regime was to divert labor away from the asymmetric warfare model to a new one, in which union bureaucrats enforced the terms of contracts on their own membership. The primary function of union bureaucracies, under the new order, was to suppress wildcat action by their rank and file, to suppress direct action on the job, and to limit labor action to declared strikes under NLRB rules.

The New Deal labor agenda had the same practical effect as telling the militiamen at Lexington and Concord to come out from behind the rocks, put on bright red uniforms, and march in parade ground formation, in return for a system of arbitration to guarantee they didn’t lose all the time.

The problem is that the bosses decided, long ago, that labor was still winning too much of the time even under the Wagner regime. Their first response was Taft-Hartley and the right-to-work laws. From that point on, union membership stopped growing and then began a slow and inexorable process of decline that continues to the present day. The process picked up momentum around 1970, when management decided that the New Deal labor accord had outlived its usefulness altogether, and embraced the full union-busting potential under Taft-Hartley in earnest. But the official labor movement still forgoes the weapons it laid down in the 1930s. It sticks to wearing its bright red uniforms and marching in parade-ground formation, and gets massacred every time.

Labor needs to reconsider its strategy, and in particular to take a new look at the asymmetric warfare techniques it has abandoned for so long.

The effectiveness of these techniques is a logical result of the incomplete nature of the labor contract. According to Michael Reich and James Devine,

Conflict is inherent in the employment relation because the employer does not purchase a specified quantity of performed labor, but rather control over the worker’s capacity to work over a given time period, and because the workers’ goals differ from those of the employer. The amount of labor actually done is determined by a struggle between workers and capitalists.

The labor contract is incomplete because it is impossible for a contract to specify, ahead of time, the exact levels of effort and standards of performance expected of workers. The specific terms of the contract can only be worked out in the contested terrain of the workplace.

The problem is compounded by the fact that management’s authority in the workplace isn’t exogenous: that is, it isn’t enforced by the external legal system, at zero cost to the employer. Rather, it’s endogenous: management’s authority is enforced entirely with the resources and at the expense of the company. And workers’ compliance with directives is frequently costly — and sometimes impossible — to enforce. Employers are forced to resort to endogenous enforcement

when there is no relevant third party…, when the contested attribute can be measured only imperfectly or at considerable cost (work effort, for example, or the degree of risk assumed by a firm’s management), when the relevant evidence is not admissible in a court of law…[,] when there is no possible means of redress…, or when the nature of the contingencies concerning future states of the world relevant to the exchange precludes writing a fully specified contract.

In such cases the ex post terms of exchange are determined by the structure of the interaction between A and B, and in particular on the strategies A is able to adopt to induce B to provide the desired level of the contested attribute, and the counter strategies available to B….

An employment relationship is established when, in return for a wage, the worker B agrees to submit to the authority of the employer A for a specified period of time in return for a wage w. While the employer’s promise to pay the wage is legally enforceable, the worker’s promise to bestow an adequate level of effort and care upon the tasks assigned, even if offered, is not. Work is subjectively costly for the worker to provide, valuable to the employer, and costly to measure. The manager-worker relationship is thus a contested exchange. [Samuel Bowles and Herbert Gintis, "Is the Demand for Workplace Democracy Redundant in a Liberal Economy?," in Ugo Pagano and Robert Rowthorn, eds., Democracy and Efficiency in the Economic Enterprise.]

Since it is impossible to define the terms of the contract exhaustively up front, “bargaining” — as Oliver Williamson puts it — “is pervasive.”

The classic illustration of the contested nature of the workplace under incomplete labor contracting, and the pervasiveness of bargaining, is the struggle over the pace and intensity of work, reflected in both the slowdown and working to rule.

At its most basic, the struggle over the pace of work is displayed in what Oliver Williamson calls “perfunctory cooperation” (as opposed to consummate cooperation):

Consummate cooperation is an affirmative job attitude–to include the use of judgment, filling gaps, and taking initiative in an instrumental way. Perfunctory cooperation, by contrast, involves job performance of a minimally acceptable sort…. The upshot is that workers, by shifting to a perfunctory performance mode, are in a position to “destroy” idiosyncratic efficiency gains.

He quotes Peter Blau and Richard Scott’s observation to the same effect:

…[T]he contract obligates employees to perform only a set of duties in accordance with minimum standards and does not assure their striving to achieve optimum performance…. [L]egal authority does not and cannot command the employee’s willingness to devote his ingenuity and energy to performing his tasks to the best of his ability…. It promotes compliance with directives and discipline, but does not encourage employees to exert effort, to accept responsibilities, or to exercise initiative.

Legal authority, likewise, “does not and cannot” proscribe working to rule, which is nothing but obeying management’s directives literally and without question. If they’re the brains behind the operation, and we get paid to shut up and do what we’re told, then by God that’s just what we’ll do.

Disgruntled workers, Williamson suggests, will respond to intrusive or authoritarian attempts at surveillance and monitoring with a passive-aggressive strategy of compliance in areas where effective metering is possible — while shifting their perfunctory compliance (or worse) into areas where it is impossible. True to the asymmetric warfare model, the costs of management measures for verifying compliance are generally far greater than the costs of circumventing those measures.

As frequent commenter Jeremy Weiland puts it in “You are the monkey wrench”:

Their need for us to behave in an orderly, predictable manner is a vulnerability of theirs; it can be exploited. You have the ability to transform from a replaceable part into a monkey wrench.

At this point, some libertarians are probably stopping up their ears and going “La la la la, I can’t hear you, la la la la!” Under the values most of us have been encultured into, values which are reinforced by the decidedly pro-employer and anti-worker libertarian mainstream, such deliberate sabotage of productivity and withholding of effort are tantamount to lèse majesté.

But there’s no rational basis for this emotional reaction. The fact that we take such a viscerally asymmetrical view of the respective rights and obligations of employers and employees is, itself, evidence that cultural hangovers from master-servant relationships have contaminated our understanding of the employment relation in a free market.

The employer and employee, under free market principles, are equal parties to the employment contract. As things normally work now, and as mainstream libertarianism unfortunately takes for granted, the employer is expected as a normal matter of course to take advantage of the incomplete nature of the employment contract. One can hardly go to Cato or Mises.Org on any given day without stumbling across an article lionizing the employer’s right to extract maximum effort in return for minimum pay, if he can get away with it. His rights to change the terms of the employment relation, to speed up the work process, to maximize work per dollar of wages, are his by the grace of God.

Well, if the worker and employer really are equal parties to a voluntary contract, as free market theory says they are, then it works both ways. The worker’s attempts to maximize his own utility, under the contested terms of an incomplete contract, are every bit as morally legitimate as those of the boss. The worker has every bit as much of a right to attempt to minimize his effort per dollar of wages as the boss has to attempt to maximize it. What constitutes a fair level of effort is entirely a subjective cultural norm that can only be determined by the real-world bargaining strength of bosses and workers in a particular workplace.

And as Kevin Depew argues, the continued barrage of downsizing, speedups, and stress will likely result in a drastic shift in workers’ subjective perceptions of a fair level of effort and of the legitimate ways to slow down.

Productivity, like most “financial virtues,” is the product of positive social mood trends.

As social mood transitions to negative, we can expect to see less and less “virtue” in hard work.

Think about it: real wages are virtually stagnant, so it’s not as if people have experienced real reward for their work.

What has been experienced is an unconscious and shared herding impulse trending upward; a shared optimistic mood finding “joy” and “happiness” in work and denigrating the sole pursuit of leisure, idleness.

If social mood has, in fact, peaked, we can expect to see a different attitude toward work and productivity emerge.

The problem is that, to date, bosses have fully capitalized on the potential of the incomplete contract, whereas workers have not. And the only thing preventing workers from doing so is the little boss inside their heads, the cultural holdover from master-servant days, that tells them it’s wrong to do so. I aim to kill that little guy. And I believe that when workers fully realize the potential of the incomplete labor contract, and become as willing to exploit it as the bosses have all these years, we’ll mop the floor with their asses. And we can do it in a free market, without any “help” from the NLRB. Let the bosses beg for help.

One aspect of direct action that especially interests me is so-called “open-mouth sabotage,” which (like most forms of networked resistance) has seen its potential increased by several orders of magnitude by the Internet.

Labor struggle, at least the kind conducted on asymmetric warfare principles, is just one subset of the general category of networked resistance. In the military realm, networked resistance is commonly discussed under the general heading of Fourth Generation Warfare.

In the field of radical political activism, networked organization represents a quantum increase in the “crisis of governability” that Samuel Huntington complained of in the mid-’70s. The coupling of networked political organization with the Internet in the ’90s was the subject of a rather panic-stricken genre of literature at the RAND Corporation, most of it written by David Ronfeldt and John Arquilla. The first major RAND study on the subject concerned the Zapatistas’ global political support network, and was written before the Seattle demos. Loosely networked coalitions of affinity groups, organizing through the Internet, could throw together large demonstrations with little notice, and “swarm” government and mainstream media with phone calls, letters, and emails far beyond their capacity to absorb. Given this elite reaction to what turned out to be a mere foreshadowing, the Seattle demonstrations of December 1999 and the anti-globalization demonstrations that followed must have seemed all the more alarming. There is strong evidence (which I have discussed elsewhere) that the “counter-terrorism” powers sought by Clinton, and by the Bush administration after 9/11, were desired by federal law enforcement mainly to go after the anti-globalization movement.

Let’s review just what was entailed in the traditional technique of “open-mouth sabotage.” From the same Wobbly pamphlet quoted above:

Sometimes simply telling people the truth about what goes on at work can put a lot of pressure on the boss. Consumer industries like restaurants and packing plants are the most vulnerable. And again, as in the case of the Good Work Strike, you’ll be gaining the support of the public, whose patronage can make or break a business.

Whistle Blowing can be as simple as a face-to-face conversation with a customer, or it can be as dramatic as the P.G.&E. engineer who revealed that the blueprints to the Diablo Canyon nuclear reactor had been reversed. Upton Sinclair’s novel The Jungle blew the lid off the scandalous health standards and working conditions of the meatpacking industry when it was published earlier this century.

Waiters can tell their restaurant clients about the various shortcuts and substitutions that go into creating the faux-haute cuisine being served to them. Just as Work to Rule puts an end to the usual relaxation of standards, Whistle Blowing reveals it for all to know.

The Internet has increased the potential for “open-mouth sabotage” by several orders of magnitude.

The first really prominent example of the open mouth, in the networked age, was the so-called McLibel case, in which McDonald’s used a SLAPP lawsuit to suppress pamphleteers highly critical of the company. Even in the early days of the Internet, bad publicity over the trial and the defendants’ savvy use of the trial as a platform drew far, far more negative attention to McDonald’s than the pamphleteers could have done without the company’s help.

In 2004, the Sinclair Media and Diebold cases showed that, in a world of BitTorrent and mirror sites, it was literally impossible to suppress information once it had been made public. As recounted by Yochai Benkler, Sinclair Media resorted to a SLAPP lawsuit to stop a boycott campaign against the company, aimed at both shareholders and advertisers, over its airing of an anti-Kerry documentary by the SwiftBoaters. Sinclair found the movement impossible to suppress, as the original campaign websites were mirrored faster than they could be shut down, and the value of its stock imploded. As also reported by Benkler, Diebold resorted to tactics much like those the RIAA uses against file-sharers to shut down sites that published internal company documents about its voting machines. The memos were quickly distributed, by BitTorrent, to more hard drives than anybody could count, and Diebold found itself playing whack-a-mole as the mirror sites displaying the information proliferated exponentially.

One of the most entertaining cases involved the MPAA’s attempt to suppress DeCSS, Jon Johansen’s CSS descrambler for DVDs. The code was posted all over the blogosphere, in a deliberate act of defiance, and even printed on T-shirts.

In the Alisher Usmanov case, the blogosphere lined up in defense of Craig Murray, who had exposed the corruption of the post-Soviet Uzbek oligarch, when Usmanov tried to suppress Murray’s site.

Finally, in the recent Wikileaks case, a judge’s order to disable the site

didn’t have any real impact on the availability of the Baer documents. Because Wikileaks operates sites like Wikileaks.cx in other countries, the documents remained widely available, both in the United States and abroad, and the effort to suppress access to them caused them to rocket across the Internet, drawing millions of hits on other web sites.

This is what’s known as the “Streisand Effect”: attempts to suppress embarrassing information result in more negative publicity than the original information itself.

The Streisand Effect is displayed every time an employer fires a blogger (the phenomenon known as “Doocing,” after the first prominent example of it) over embarrassing comments about the workplace. The phenomenon has attracted considerable attention in the mainstream media. In most cases, employers who attempt to suppress embarrassing comments by disgruntled workers are blindsided by the much, much worse publicity resulting from the suppression attempt itself. Instead of a regular blog readership of a few hundred reading that “Employer X Sucks,” the blogosphere or a wire service picks up the story, and tens of millions of people read “Blogger Fired for Revealing Employer X Sucks.” It may take a while, but the bosses will eventually learn that, for the first time since the rise of the large corporation and the broadcast culture, we can talk back. And not only is it absolutely impossible to shut us up, but we’ll keep making more and more noise the more they try to do so.

To grasp just how breathtaking the potential is for open-mouth sabotage, and for networked anti-corporate resistance by consumers and workers, just consider the proliferation of anonymous employernamesucks.com sites. The potential results from the anonymity of the writeable web, the comparative ease of setting up anonymous sites (through third country proxy servers, if necessary), and the possibility of simply emailing large volumes of embarrassing information to everyone you can think of whose knowledge might be embarrassing to an employer.

Regarding this last, it’s pretty easy to compile a devastating email distribution list with a little Internet legwork. You might include the management of your company’s suppliers, outlets, and other business clients, reporters who specialize in your industry, mainstream media outlets, alternative news outlets, worker and consumer advocacy groups, corporate watchdog organizations specializing in your industry, and the major bloggers who specialize in such news. If your problem is with the management of a local branch of a corporate chain, you might add to the distribution list all the community service organizations your bosses belong to, and CC it to corporate headquarters to let them know just how much embarrassment your bosses have caused them. The next step is to set up a dedicated, web-based email account accessed from someplace secure. Then it’s pretty easy to compile a text file of all the dirt on their corruption and mismanagement, and the poor quality of customer service (with management contact info, of course). The only thing left is to click “Attach,” and then click “Send.” The barrage of emails, phone calls and faxes should hit the management suite like an A-bomb.

So what model will labor need to follow, in the vacuum left by the near total collapse of the Wagner regime and the near-total defeat of the establishment unions? Part of the answer lies with the Wobbly “direct action on the job” model discussed above. A great deal of it, in particular, lies with the application of “open mouth sabotage” on a society-wide scale as exemplified by cases like McLibel, Sinclair, Diebold, and Wikileaks, described above.

Another piece of the puzzle has been suggested by the I.W.W.’s Alexis Buss, in her writing on “minority unionism”:

If unionism is to become a movement again, we need to break out of the current model, one that has come to rely on a recipe increasingly difficult to prepare: a majority of workers vote a union in, a contract is bargained. We need to return to the sort of rank-and-file on-the-job agitating that won the 8-hour day and built unions as a vital force….

Minority unionism happens on our own terms, regardless of legal recognition….

U.S. & Canadian labor relations regimes are set up on the premise that you need a majority of workers to have a union, generally government-certified; in a worldwide context, this is a relatively rare set-up. And even in North America, the notion that a union needs official recognition or majority status to have the right to represent its members is of relatively recent origin, thanks mostly to the choice of business unions to trade rank-and-file strength for legal maintenance of membership guarantees.

The labor movement was not built through majority unionism; it couldn’t have been.

How are we going to get off of this road? We must stop making gaining legal recognition and a contract the point of our organizing….

We have to bring about a situation where the bosses, not the union, want the contract. We need to create situations where bosses will offer us concessions to get our cooperation. Make them beg for it.

But more than anything, the future is being worked out in the current practice of labor struggle itself. We’re already seeing a series of prominent labor victories resulting from the networked resistance model.

The Wal-Mart Workers’ Association, although it doesn’t have an NLRB-certified local in a single Wal-Mart store, is a de facto labor union. And it has achieved victories through “associates” picketing and pamphleting stores on their own time, through swarming via the strategic use of press releases and networking, and through the same sort of support network that Ronfeldt and Arquilla remarked on in the case of the pro-Zapatista campaign. By using negative publicity to embarrass the company, the Association has repeatedly obtained concessions from Wal-Mart. Even a conventional liberal like Ezra Klein understands the importance of such unconventional action.

The Coalition of Immokalee Workers, a movement of largely indigenous and immigrant agricultural laborers who supply many of the tomatoes used by the fast food industry, has used a similar support network, with the coordinated use of leaflets and picketing, petition drives, and boycotts, to obtain major concessions from Taco Bell, McDonald’s, Burger King, and KFC. Blogger Charles Johnson has written about the campaign in inspiring detail.

In another example of open-mouth sabotage, the IWW-affiliated Starbucks union publicly embarrassed Starbucks Chairman Howard Schultz. It organized a mass email campaign informing the board of a co-op apartment building he was seeking to buy into about his union-busting activities.

Such networked labor resistance is making inroads even in China, the capitalist motherland of sweatshop employers. Michel Bauwens, at P2P Blog, quotes a story from the Taiwanese press:

“The factory closure last November was a scenario that has been repeated across southern China, where more than 1,000 shoe factories — about a fifth of the total — have closed down in the past year. The majority were in Houjie, a concrete sprawl on the outskirts of Dongguan known as China’s “Shoe Town.”

“In the past, workers would just swallow all the insults and humiliation. Now they resist,” said Jenny Chan, chief coordinator of the Hong Kong-based pressure group Students and Scholars against Corporate Misbehavior, which investigates factory conditions in southern China.

“They collect money and they gather signatures. They use the shop floors and the dormitories to gather the collective forces to put themselves in better negotiating positions with factory owners and managers,” she said.

Technology has made this possible.

“They use their mobile phones to receive news and send messages,” Chan said. “Internet cafes are very important, too. They exchange news about which cities or which factories are recruiting and what they are offering, and that news spreads very quickly.”

As a result, she says, factories are seeing huge turnover rates. In Houjie, some factories have tripled workers’ salaries, but there are still more than 100,000 vacancies.”

The AFL-CIO’s Lane Kirkland once suggested, half-heartedly, that things would be easier if Congress repealed all labor laws, and let labor and management go at it “mano a mano.” It’s time to take this proposal seriously. So here it is — a free market proposal to employers:

We give you the repeal of Wagner, of the anti-yellow dog provisions of Norris-LaGuardia, of legal protections against punitive firing of union organizers, and of all the workplace safety, overtime, and fair practices legislation. You give us the repeal of Taft-Hartley, of the Railway Labor Act and its counterparts in other industries, of all state right-to-work laws, and of SLAPP lawsuits. All we’ll leave in place, out of the whole labor law regime, are the provisions of Norris-LaGuardia taking intrusion by federal troops and court injunctions out of the equation.

And we’ll mop the floor with your asses.